Our Universe Is A Massive Neural Network: Here’s Why

A few days ago, I read an article on arXiv by Vitaly Vanchurin titled ‘The world as a neural network’.

My first thought was that it would be about the simulation hypothesis, but after some reading, I saw that it is not really about that. Nowadays, the most popular candidate for a fundamental theory in physics is string theory (or rather theories, because there are many).

According to string theory, space has 9 (or more) dimensions, and the most fundamental object is the superstring. These strings vibrate in this high-dimensional space, and every particle in the Universe is a vibration of a superstring.

In Vanchurin’s theory, the most fundamental object is a neuron, and the Universe can be described as a neural network. Neural networks are trainable mathematical structures inspired by the human brain. A neuron is a simple processing unit, usually described by a single mathematical function.

A neural network is a graph of these neurons, and what it does depends on the strengths of the connections (the weights). So, a neural network is something like a computer that can be programmed through its weights. Vanchurin argues in his article that the Universe can be described as a neural network, and that quantum mechanics and general relativity can be recovered from it.
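To make “programmed through its weights” concrete, here is a minimal Python sketch of ordinary artificial neurons (not Vanchurin’s model). The function names and the hand-picked weights are illustrative assumptions: the same network code computes logical AND, OR, or XOR depending only on the weights it is given.

```python
import math

def neuron(inputs, weights, bias):
    """A single neuron: weighted sum of its inputs plus a bias, squashed by a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))

def network(inputs, program):
    """Feed the inputs through a list of layers; each layer is (weight_rows, biases)."""
    activations = inputs
    for weight_rows, biases in program:
        activations = [neuron(activations, w, b) for w, b in zip(weight_rows, biases)]
    return activations

def run(program, a, b):
    # Round the single output neuron to a 0/1 answer.
    return round(network([a, b], program)[0])

# Hypothetical hand-picked weights: same code, different "programs".
AND = [([[10.0, 10.0]], [-15.0])]
OR = [([[10.0, 10.0]], [-5.0])]
# Two layers: the hidden neurons compute OR and NAND, the output neuron ANDs them.
XOR = [([[20.0, 20.0], [-20.0, -20.0]], [-10.0, 30.0]),
       ([[20.0, 20.0]], [-30.0])]

for a in (0, 1):
    for b in (0, 1):
        print(a, b, run(AND, a, b), run(OR, a, b), run(XOR, a, b))
```

Nothing about the graph changes between the three cases; only the numbers on the connections do, which is the sense in which the weights are the program.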

So, if Vanchurin is right, a neural network is at least as good a model of our Universe as string theory or any other. The article is full of math, and it is hard to read if you are not a physicist or a mathematician, but this is the point in a nutshell.

Vanchurin’s theory is very impressive, but it is important to note that in this model the neural network is not a “thinking machine”: it does not recognize patterns or do any of the other things we use neural networks for in computer technology. He uses it as a mathematical model of particles.

But what if the particles and even space and time are not fundamental?

The idea that space-time is not fundamental is weird, but not new. Donald Hoffman is a leading proponent of the theory of the “conscious Universe”. In his theory, the most fundamental thing is the conscious agent, and space-time and particles are only emergent properties of conscious experience.

If you are interested in Hoffman’s theory, watch his TED talk or one of his interviews.

At the beginning of the 20th century, Einstein gave a new definition of space and time, which until then had been considered untouchable, fundamental properties of reality. He assumed that the speed of light is the same for every observer, and that this is a more fundamental law of physics than the absoluteness of time.

This was the basis of special relativity. Another example is quantum mechanics, where quantum nonlocality suggests that space-time is something different from what we experience in everyday life.

Although Hoffman’s theory of space and time is more radical, these cases are good examples showing that questioning fundamental assumptions can sometimes be the basis of a good theory.

In Hoffman’s theory, the fundamental reality is a hierarchical structure of conscious agents, and the whole experienced reality, with space and time, is something like a “headset” on our conscious mind, which makes it very close to the simulation hypothesis.

Some years ago, I wrote an essay titled ‘How to build simulated realities?!’. In this essay, I tried to imagine a future where mind uploading is real, and I asked the question: what would be the optimal way to create simulated realities for these minds?

The first time I read about mind uploading was in Ray Kurzweil’s famous book The Singularity Is Near. Mind uploading is the full digitization of the human brain: a technology where the brain is copied neuron by neuron and simulated on a digital computer. If the human mind is the result of brain function, then this simulated copy will be identical to us. So, if mind uploading is possible, how could we create an optimal simulated reality for these digitized minds?

The most obvious solution is to simulate reality particle by particle, but this is very wasteful and needs an enormous amount of computing capacity.

Then what can be done?

We can do what every computer game does: render only what the user sees. Since we have five senses, rendering the visual experience is not enough; smells, sounds, etc. are also needed, but the model is the same: only what somebody observes has to be rendered.
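A toy version of the “render only what is observed” idea fits in a few lines of Python. Everything here is a hypothetical illustration: objects are points on a plane, each sense is modeled as a simple range around the observer, and anything outside every range is never rendered at all.

```python
from dataclasses import dataclass

@dataclass
class Thing:
    name: str
    x: float
    y: float

@dataclass
class Observer:
    x: float
    y: float
    senses: dict  # hypothetical: sense name -> range within which that sense perceives things

def perceived(observer, things, sense_range):
    """Names of the things within sense_range of the observer."""
    return [t.name for t in things
            if (t.x - observer.x) ** 2 + (t.y - observer.y) ** 2 <= sense_range ** 2]

def render_frame(observer, things):
    """Render each sense separately, and only for the things that sense can reach."""
    return {sense: perceived(observer, things, r) for sense, r in observer.senses.items()}

world = [Thing("apple", 1, 0), Thing("tree", 5, 0), Thing("mountain", 100, 0)]
me = Observer(0, 0, senses={"sight": 10, "hearing": 3})
frame = render_frame(me, world)
print(frame)
```

The mountain costs nothing here because no sense reaches it. That is the saving, and also the weakness: nothing in this scheme guarantees that the mountain is still there, unchanged, when someone finally looks at it.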

Some interpretations of quantum mechanics say something similar about reality: in these interpretations, only observed things are real. But this “trick” doesn’t solve the problem of computing capacity, because the simulated reality will be consistent only if particles are also simulated when they are not observed.

For example, if I hold an apple in my hand and close my eyes, the apple should still be there when I open them again. In quantum mechanics, the wave function describes particles when nobody observes them, and it collapses into a definite, real object when an observer observes it.

Simulating the wave function would also need a huge (nearly infinite) computing capacity. Fortunately, there is another “trick” to solve this problem. If the goal is to simulate reality for an observer, then it is enough to take the observer’s expectations into account. To stick with the apple example: when I open my eyes, I expect the apple to be there.

So, if the system knows my expectations, it can simulate a fully consistent reality for me. Rendering the expectations is something like projecting reality. The human brain does this every day: we only ever perceive pieces of reality, and our brain fills in the missing parts. But what if these parts of reality are not coming from outside, but from the projection of another mind?

If you are interested in how our brain projects reality, watch Anil Seth’s TED talk about the topic.

My hypothetical simulation system doesn’t simulate anything. It only “merges” the projections of the different minds to create a consistent reality from them, which is why I call it the “consistency machine”.

The consistency machine collects the projections from the individual uploaded minds, merges them, and renders the consistent reality back to them. The consistency machine doesn’t need an external memory, and it doesn’t have to simulate any particles. It only renders the stimuli for the five senses from the merged projections.

But what if the consistency machine cannot merge the projections because there is a basic contradiction between them? In this case, the consistency machine has to change the expectations to synchronize them. The expectations of an individual mind come from its past experiences, so manipulating minds and changing their expectations is something like time travel.
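The merging step of the hypothetical consistency machine can be sketched as a small Python function. This is only an illustration under stated assumptions: each mind’s projection is a dictionary from objects to expected states, objects that all minds agree on are merged directly, and contradictions are resolved by a made-up majority-vote policy that overwrites the minority’s expectations (standing in for “changing the past”).

```python
from collections import Counter

def merge_projections(projections):
    """Merge every mind's expectations into one shared reality.

    projections maps a mind's name to a dict of object -> expected state.
    Returns (merged, conflicts): conflicts maps each contested object
    to the set of different states that minds expect for it.
    """
    merged, conflicts = {}, {}
    all_objects = {obj for p in projections.values() for obj in p}
    for obj in sorted(all_objects):
        states = [p[obj] for p in projections.values() if obj in p]
        if len(set(states)) == 1:
            merged[obj] = states[0]  # every mind that expects something agrees
        else:
            conflicts[obj] = set(states)
    return merged, conflicts

def synchronize(projections):
    """Hypothetical policy: overwrite minority expectations with the majority one.

    Mutates the projections in place, standing in for "changing the past"
    of the minds that disagree, and returns the fully consistent reality.
    """
    merged, conflicts = merge_projections(projections)
    for obj in conflicts:
        states = [p[obj] for p in projections.values() if obj in p]
        winner = Counter(states).most_common(1)[0][0]
        for p in projections.values():
            if obj in p:
                p[obj] = winner
        merged[obj] = winner
    return merged

minds = {
    "alice": {"apple": "in hand", "door": "closed"},
    "bob": {"apple": "in hand", "door": "open"},
    "carol": {"door": "closed"},
}
merged, conflicts = merge_projections(minds)
reality = synchronize(minds)
```

After synchronizing, Bob “remembers” a closed door, and from then on there is no trace of the contradiction left to find.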

The consistency machine “goes back in time” and changes the events to synchronize the expectations. This is also an ultimate solution to protect the simulation. If somebody could prove that we are living in a simulation, the consistency machine could go back in time and patch the “security hole”. So, in this system, you will never be able to prove it is a simulation.

In 2012, in his book How to Create a Mind, Ray Kurzweil published a theory about how the human mind works. In this theory, the neocortex of the human brain is built from pattern recognizer modules.

A module is a bunch of neurons that is able to recognize a pattern. These modules are interconnected in a hierarchical structure. The low-level modules recognize primitive patterns and send signals forward to the higher-level modules, and higher-level modules can also send signals back to lower-level modules to activate them.

In this model, thinking is something like an associative chain of pattern recognizer activations. In many cases, the pattern recognizers compete with each other, and a higher level decides which module wins.

In this model, conscious experience is the result of this competition at the top level of the hierarchy. This hierarchical model is able to ensure consistency at the top level: if there is an inconsistency at any level, the higher-level pattern recognizers send signals to the lower-level modules to block them or change their activations.
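The competition and the top-down correction can be illustrated with a toy Python sketch. It is not Kurzweil’s actual model: the low-level “letter recognizers” are replaced by hand-written confidence values, a word’s score is assumed to be the product of its letter confidences, and the word list is hypothetical.

```python
def score_word(word, letter_levels):
    """Assumed scoring rule: product of the low-level confidences for each letter."""
    if len(word) != len(letter_levels):
        return 0.0
    score = 1.0
    for letter, candidates in zip(word, letter_levels):
        score *= candidates.get(letter, 0.0)
    return score

def perceive(letter_levels, vocabulary):
    """Word recognizers compete; the winner then blocks inconsistent letter activations."""
    scores = {word: score_word(word, letter_levels) for word in vocabulary}
    winner = max(scores, key=scores.get)  # the higher level decides the competition
    # Top-down signal: every low-level candidate that disagrees with the winner is blocked.
    blocked = [(i, letter)
               for i, (expected, candidates) in enumerate(zip(winner, letter_levels))
               for letter in candidates if letter != expected]
    return winner, blocked

# A smudged input: at position 1 the letter recognizers actually prefer "R".
letters = [{"A": 0.9}, {"P": 0.4, "R": 0.6}, {"P": 0.8}, {"L": 0.9}, {"E": 0.7, "F": 0.3}]
winner, blocked = perceive(letters, ["APPLE", "AMPLE", "ANKLE"])
print(winner, blocked)
```

On its own, the low level would have read an “R”, but the word-level competition settles on APPLE and suppresses the activations that contradict it, so the top level only ever “experiences” a consistent word.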

Kurzweil’s pattern recognizers are something like Hoffman’s conscious agents. The difference is that Hoffman’s agents are more abstract, fundamental entities, while Kurzweil’s recognizers are primitive modules built from a bunch of neurons.

Both theories claim that human consciousness is the result of the hierarchy. The hierarchical network of modules that produces a consistent conscious experience is very similar to the consistency machine. What if the individual mind (the self) is not at the top level, but only a middle-level structure in the module hierarchy?

In this model, the top of the hierarchy is not the individual mind but a global consciousness that contains the individual minds and keeps the reality they experience consistent. It is something like a giant brain with multiple personalities.

Although multiple personalities sound weird, the idea is not as unusual as you might think. One of Hoffman’s favorite examples is the split-brain experiments. Corpus callosotomy is a surgical procedure for treating medically refractory epilepsy.

In this procedure, the corpus callosum is cut to limit the spread of epileptic activity between the two halves of the brain. After the procedure, experiments show that in many cases the two halves of the patient’s brain behave as if they were two separate personalities.

These experiments suggest that every human brain is built from two conscious entities, which form one consistent personality as long as the two halves of the brain are connected.

In the global consciousness model, the individual minds are interconnected in the same way, and this interconnection is experienced by the individual minds as a consistent reality. In this model, space, time, particles, and every other element of reality are the result of the interconnection of minds.

If space-time and particles are only a result of the interconnected minds, then what can be said about the “outer”, objective reality? Nearly nothing. But if we assume that the global consciousness is built from abstract, neuron-like mathematical structures, then we can compare it to the current physical model.

In the state-of-the-art model of the physical world, the Universe arose from nothing at the Big Bang, and its most fundamental building block is the superstring.

Superstrings are mathematical structures in a high-dimensional space that form particles. Every particle can be described by a state vector, and physical laws are operators acting on these vectors. As time goes on, these operators continuously transform the state vectors.
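The idea of “operators transforming state vectors” can be shown with the simplest quantum system, a two-state system. This is a textbook-style toy, not a model of a superstring: the operator below, cos θ·I - i·sin θ·σx, is one particular unitary operator chosen for illustration, and applying it repeatedly rotates the state while keeping the total probability equal to 1.

```python
import math

def apply(operator, state):
    """Multiply a matrix (list of rows) by a state vector."""
    return [sum(operator[i][j] * state[j] for j in range(len(state)))
            for i in range(len(operator))]

def evolution_operator(theta):
    """U = cos(theta) * I - i * sin(theta) * sigma_x, a unitary operator on two-state vectors."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -1j * s],
            [-1j * s, c]]

def probabilities(state):
    # Born rule: the probability of each basis state is |amplitude|^2.
    return [abs(a) ** 2 for a in state]

state = [1.0 + 0j, 0.0 + 0j]            # start in the first basis state
step = evolution_operator(math.pi / 8)  # one time step of the "physical law"
for _ in range(4):
    state = apply(step, state)
    print(probabilities(state))
```

After four steps the state has moved entirely from the first basis state to the second, yet at every step the probabilities still add up to 1: the law changes the state vector, never its overall length.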

Consciousness is the result of evolution, and the anthropic principle explains why it could form at all. The anthropic principle is a simple, elegant answer to why our Universe exists and why it is fine-tuned for conscious intelligent life.

Possibly there are many universes in the multiverse, but in the universes that are not fine-tuned for conscious intelligent life, there is no one to ask “why is my universe fine-tuned?”. The multiverse is playing a lottery game, and our universe won the jackpot: us.

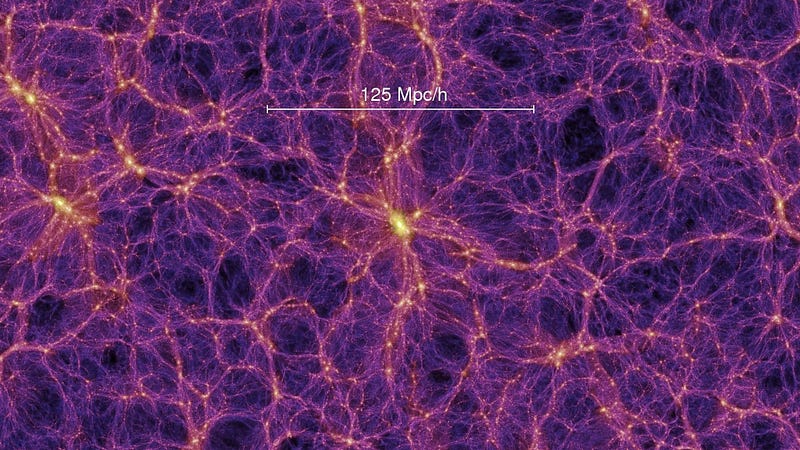

A neural network Universe is very similar. It could also arise from nothing, but it is built from neurons instead of particles. At the lowest level, neurons and particles are very similar.

Both are mathematical structures described by state vectors, which are continuously transformed by the neural laws through neural interactions.
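For comparison, here is a toy “neural law” in Python: a state vector of neuron activations, updated at each step by the interaction weights and a tanh activation. This is an ordinary recurrent-network update chosen for illustration, not the dynamics Vanchurin uses; the weights and the starting state are arbitrary assumptions.

```python
import math

def neural_step(state, weights, biases):
    """One application of the neural law: each neuron sums the weighted
    activations of all neurons, adds its bias, and applies tanh."""
    return [math.tanh(sum(w * x for w, x in zip(row, state)) + b)
            for row, b in zip(weights, biases)]

weights = [[0.0, 1.0],   # neuron 0 is driven by neuron 1
           [-1.0, 0.0]]  # neuron 1 is inhibited by neuron 0
biases = [0.0, 0.0]

state = [0.5, 0.0]
history = [state]
for _ in range(3):
    state = neural_step(state, weights, biases)
    history.append(state)
print(history)
```

Unlike the linear operators of quantum mechanics, this update is nonlinear and does not preserve the length of the vector, but it has the same overall shape: a state vector and a fixed rule that transforms it step by step.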

Possibly there are many empty neural universes, but some of them are suitable for some kind of evolution, and conscious entities developed in them.

Is this type of Universe a correct model of our current Universe? It could be, and, as described above, we may never be able to prove or refute that our Universe is a neural network.

What can be done with such a theory? If Hoffman is right and space-time is not fundamental, then maybe we can somehow “hack” it, as Hoffman has suggested.

If he is wrong, and consciousness is a result of brain function that can be explained by physical laws, then we may be able to simulate it and build our own realities in the future.

So the right question is: “When will we be living in a simulation, or are we already living in one?”