Why Tesla’s Optimus Is a Big Step on the Way to AGI

You have probably heard about the scandal around Google’s AI, LaMDA. In a nutshell, a member of Google’s AI ethics team claimed that this chatbot is conscious and that it has basic human rights. But is LaMDA really conscious?

Like the famous GPT-3, LaMDA is based on the transformer architecture. A transformer is basically a statistical model that predicts the next word based on the huge amount of text it was trained on. So, as Gary Marcus said:

GPT is autocomplete on steroids but it gives the illusion that it’s more than that.
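
To make the "autocomplete" idea concrete, here is a deliberately tiny sketch of statistical next-word prediction. It is not a transformer and has nothing to do with how LaMDA or GPT-3 are actually built: it only counts which word follows which in a made-up toy corpus. The function names and the corpus are my own assumptions for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def autocomplete(followers, prompt, max_words=5):
    """Greedily extend the prompt one predicted word at a time."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_words):
        next_word = predict_next(followers, words[-1])
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)


# Toy corpus (hypothetical); a real model sees billions of words.
corpus = "water is cold . water is blue . water is cold and wet ."
model = train_bigram_model(corpus)
print(autocomplete(model, "water", max_words=2))
```

The model completes "water" without ever having seen, touched or tasted water. A large language model is vastly more sophisticated, conditioning on long contexts instead of a single word, but the prediction objective is similar in spirit.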

But this is only one side of the coin. The other side is that some scientists think that consciousness is an illusion. Our actions are basically unconscious, and the conscious experience is only the story our brain tells about what happened. So, “Is LaMDA conscious?” is a very exciting question, but there is a more exciting one:

Are we conscious?

LaMDA doesn’t have any sensory input. It is blind and deaf. It cannot taste, touch or smell anything. Would a baby born without any of these senses have any chance of becoming an intelligent being? Would he or she be conscious?

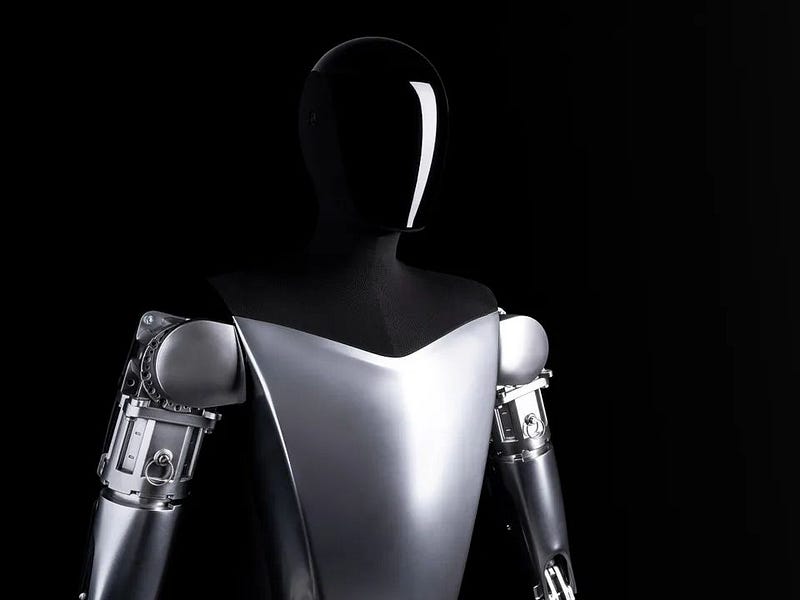

For me, “water” is blue, “water” is cold, “water” is the taste of water and the touch of water. For LaMDA, “water” is only a symbol without any meaning. It cannot really “understand” water without these sensory inputs, and because of this, I think it cannot be really conscious. But what if LaMDA gets a body?

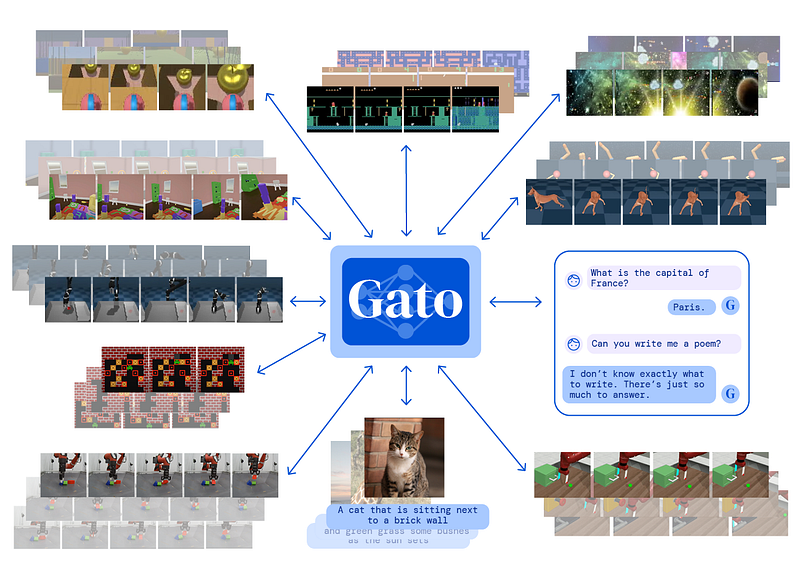

LaMDA is a large language model. Can this kind of model control a whole body? DeepMind has already answered the question. Gato, DeepMind’s Generalist Agent, builds upon the same transformer architecture as LaMDA. This generalist agent can chat with you, caption images, or control robotic arms with the same neural-network architecture and weights. So, if your model is large enough, it will be able to control a robot that not only chats, but also moves and acts like a human.

These large language models are trained on huge amounts of text that can be easily crawled from the Internet, but how can we train a robot?

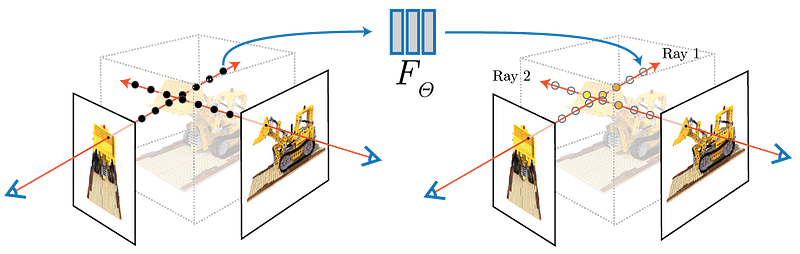

My first idea is films and video content from social networks and the Internet. We have a huge amount of 2D footage that can be converted to 3D with techniques such as NeRF. So watching movies could be a good way for a robot to learn how to act like a human. Obviously, I hope it will skip watching the Terminator, and movies like it…

My second idea is the metaverse. The metaverse can be the largest source of big data about real human interactions. It is real 3D, and the robot can experience everything from the users’ point of view.

The third and best source would be AR devices. Glasses that we wear all day, and that record everything while they project a second layer onto the real world, would be the best source of big data for robot training.

And there is a very exciting opportunity. What if we train a robot on a specific person’s experiences, collected by their smart glasses? If we have enough data, the robot will act like that person, and after the person’s death, the robot can live on with us. It is a form of digital immortality.

Training these models requires huge computing capacity and room-sized GPU clusters, so training them locally is not possible. But it is not needed. The base network can be trained on these large clusters, and only a small part of the neural network has to be trained by the customer. This is very similar to how nature works: our brains are pre-wired by evolution. As Karpathy said in an interview, when a zebra is born, it can run immediately. Running is not learned; it’s pre-wired in the brain. In the same way, our robots will be pre-wired, and only a small neural network on top of the pre-wired part will be trainable, small enough to be trained locally.
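
The "pre-wired base plus small trainable part" idea can be sketched in a few lines. This is only a toy under loud assumptions: the frozen base here is a layer of random weights standing in for a network pre-trained on a large cluster, the task (predicting `x[0] - x[1]`) is invented, and all names are hypothetical. The only point is that the base weights never change while the small head on top learns.

```python
import math
import random

random.seed(0)

N_INPUTS, N_FEATURES = 2, 8

# "Pre-wired" base: weights fixed once and never updated afterwards.
# In a real system these would come from large-scale pre-training;
# here they are random placeholders (an assumption of this sketch).
BASE_WEIGHTS = [[random.uniform(-1, 1) for _ in range(N_INPUTS)]
                for _ in range(N_FEATURES)]
BASE_BIAS = [random.uniform(-1, 1) for _ in range(N_FEATURES)]


def frozen_features(x):
    """Run the input through the frozen base network."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(BASE_WEIGHTS, BASE_BIAS)]


def head_output(head, bias, features):
    """Linear head applied on top of the frozen features."""
    return sum(h * f for h, f in zip(head, features)) + bias


def train_head(samples, targets, epochs=500, lr=0.05):
    """Fit only the small linear head with stochastic gradient descent."""
    head, bias = [0.0] * N_FEATURES, 0.0
    features = [frozen_features(x) for x in samples]
    for _ in range(epochs):
        for f, y in zip(features, targets):
            error = head_output(head, bias, f) - y
            head = [h - lr * error * fi for h, fi in zip(head, f)]
            bias -= lr * error
    return head, bias


def mean_squared_error(head, bias, samples, targets):
    """Average squared prediction error over the data set."""
    errors = [(head_output(head, bias, frozen_features(x)) - y) ** 2
              for x, y in zip(samples, targets)]
    return sum(errors) / len(errors)


# Invented "local" data that the customer would provide.
samples = [[random.uniform(-1, 1) for _ in range(N_INPUTS)]
           for _ in range(50)]
targets = [x[0] - x[1] for x in samples]

base_snapshot = [row[:] for row in BASE_WEIGHTS]
initial_error = mean_squared_error([0.0] * N_FEATURES, 0.0, samples, targets)
head, bias = train_head(samples, targets)
trained_error = mean_squared_error(head, bias, samples, targets)
print(f"error before: {initial_error:.3f}, after: {trained_error:.3f}")
```

Only `N_FEATURES + 1` numbers are learned here, which is why this step is cheap enough to run on local hardware, while the expensive base stays untouched.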

And the final question: “Will these robots be conscious?”

I don’t think so, but they will act like humans, and they will do it better and better.

Becoming conscious will be a long process. By the time it happens, robots will be a standard part of our lives, and we might not even notice when they become conscious, because it’s hard to distinguish real consciousness from fake. If “real” consciousness really exists (whatever that means)…

You can love or hate Elon and his companies, but I think humanoid robots like Optimus are a necessary next step toward real human-level AGI.
